Choosing Instance Type

Choosing the right instance type for your workload.

This guide helps you choose the right instance type for your workload. It offers general guidance, since it is impossible to give specific instructions for every possible use case. The goal is to give you a starting point from which you can run your own benchmarks and optimizations.

For more information about engineering at scale, see our Engineering for Scale guide.

Simple workloads

We've run a set of benchmarks using the gist-960-angular dataset. This dataset contains 1,000,000 embeddings for images, with each embedding being 960 dimensions.
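As a rough sizing aid, the raw storage needed for a dataset like this can be estimated before picking an instance. The sketch below assumes pgvector's documented storage cost of 4 bytes per dimension plus 8 bytes of overhead per vector. The function name is illustrative, and the estimate excludes index size, table overhead, and Postgres working memory, so treat it as a lower bound rather than a memory requirement.

```python
def estimate_vector_storage_bytes(n_vectors: int, dimensions: int) -> int:
    """Approximate raw vector storage, assuming 4 bytes per dimension + 8 bytes per vector."""
    bytes_per_vector = 4 * dimensions + 8
    return n_vectors * bytes_per_vector


if __name__ == "__main__":
    # Full gist-960-angular dataset: 1,000,000 vectors of 960 dimensions.
    total = estimate_vector_storage_bytes(1_000_000, 960)
    print(f"{total:,} bytes (~{total / 1024**3:.2f} GiB)")
```

For the full dataset this comes to 3,848,000,000 bytes, or roughly 3.58 GiB of raw vector data before any index is built.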

We used Vecs to create a collection, upload the embeddings to a single table, and create an inner-product index for the embedding column. We then ran a series of queries to measure the performance of different instance types.

Results

On smaller instances, the gist-960-angular dataset was truncated so that the number of vectors fits the instance size.

| Plan | CPU | Memory | Vectors | RPS | Mean Latency | p95 Latency | Max CPU Usage | Max Memory Usage |
|---|---|---|---|---|---|---|---|---|
| Free | 2-core | 1 GB | 30,000 | 75 | 0.065 sec | 0.088 sec | 90% | 1 GB + 100 MB swap |
| Small | 2-core | 2 GB | 100,000 | 78 | 0.064 sec | 0.092 sec | 80% | 1.8 GB |
| Medium | 2-core | 4 GB | 250,000 | 58 | 0.085 sec | 0.129 sec | 90% | 3.2 GB |
| Large | 2-core | 8 GB | 500,000 | 55 | 0.088 sec | 0.140 sec | 90% | 5 GB |

Larger instances were tested with the full gist-960-angular dataset of 1,000,000 vectors.

| Plan | CPU | Memory | Vectors | RPS | Mean Latency | p95 Latency | Max CPU Usage | Max Memory Usage |
|---|---|---|---|---|---|---|---|---|
| XL | 4-core | 16 GB | 1,000,000 | 110 | 0.046 sec | 0.070 sec | 45% | 14 GB |
| 2XL | 8-core | 32 GB | 1,000,000 | 235 | 0.083 sec | 0.136 sec | 33% | 10 GB |
| 4XL | 16-core | 64 GB | 1,000,000 | 420 | 0.071 sec | 0.106 sec | 45% | 11 GB |
| 8XL | 32-core | 128 GB | 1,000,000 | 815 | 0.072 sec | 0.106 sec | 75% | 13 GB |
| 12XL | 48-core | 192 GB | 1,000,000 | 1150 | 0.052 sec | 0.078 sec | 70% | 15.5 GB |
| 16XL | 64-core | 256 GB | 1,000,000 | 1345 | 0.072 sec | 0.106 sec | 60% | 17.5 GB |

- Lists set to Number of vectors / 1000
- Probes set to 10
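The Lists and Probes settings above can be worked out per plan with a few lines of Python. This is a minimal sketch: the function name and the floor of one list are assumptions made for illustration, not part of the benchmark code. It also prints the fraction of lists scanned per query, relying on the fact that an IVFFlat query searches `probes` lists out of `lists`.

```python
def ivfflat_benchmark_params(n_vectors: int, probes: int = 10) -> dict:
    """Derive index settings using the rule above: lists = vectors / 1000, probes = 10."""
    # Assumption: never go below one list for very small tables.
    lists = max(1, n_vectors // 1000)
    return {"lists": lists, "probes": probes}


if __name__ == "__main__":
    # Vector counts per plan, taken from the results tables.
    vectors_per_plan = {
        "Free": 30_000,
        "Small": 100_000,
        "Medium": 250_000,
        "Large": 500_000,
        "XL and above": 1_000_000,
    }
    for plan, n_vectors in vectors_per_plan.items():
        params = ivfflat_benchmark_params(n_vectors)
        scanned = min(1.0, params["probes"] / params["lists"])
        print(f"{plan}: lists={params['lists']}, probes={params['probes']}, "
              f"share of lists scanned={scanned:.1%}")
```

With a fixed probe count, the smaller tables scan a much larger share of their lists per query (10 of 30 on Free versus 10 of 1,000 on XL), which is worth keeping in mind when comparing rows across the two tables.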

Note

You can upload more than 1,000,000 vectors to a single table if memory allows (for example, on 2XL instances and larger). However, query performance will degrade: RPS will be lower and latency will be higher. Scaling should be almost linear, but we recommend benchmarking your own workload to find the optimal number of vectors per table and per instance.

Methodology

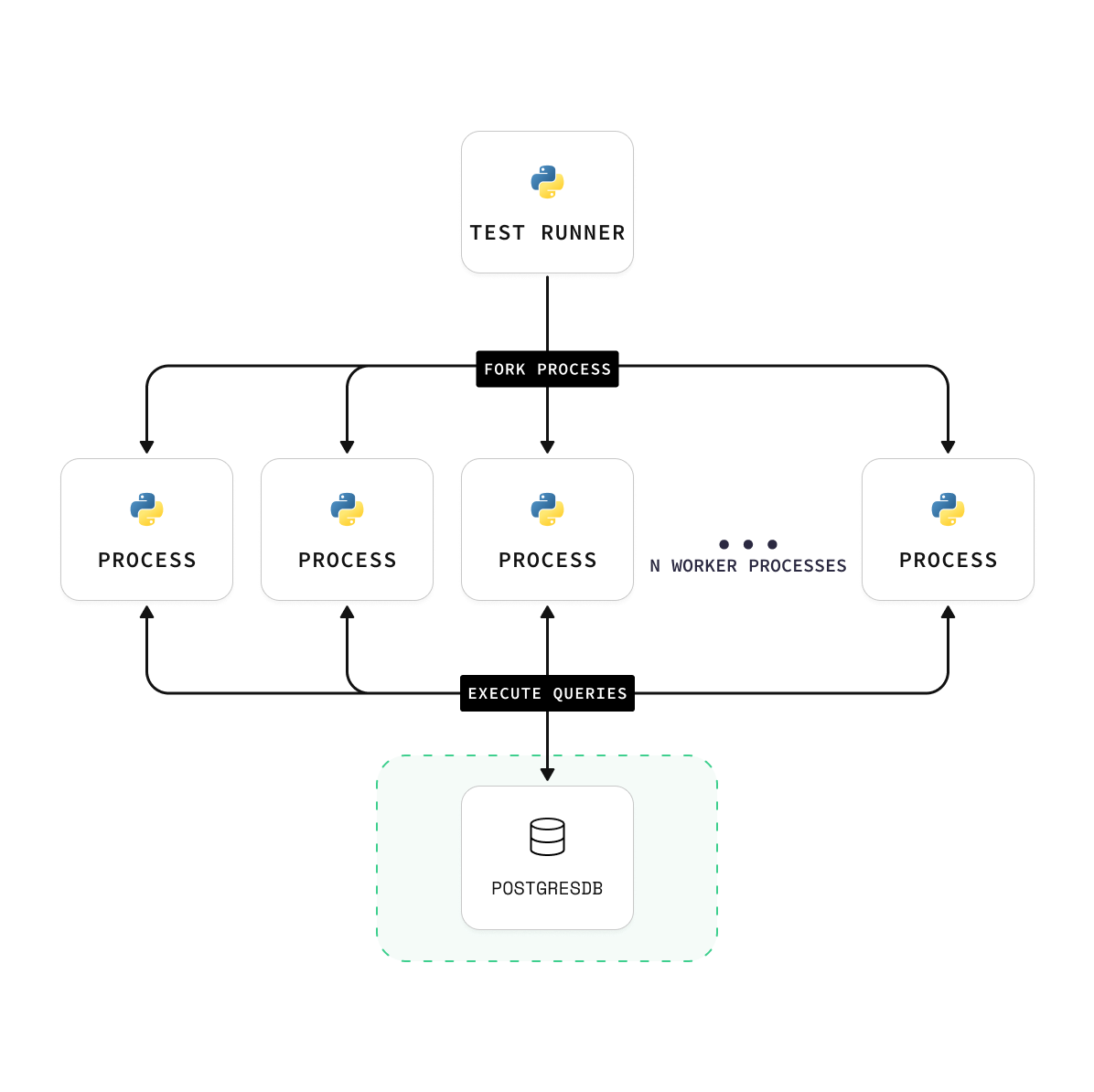

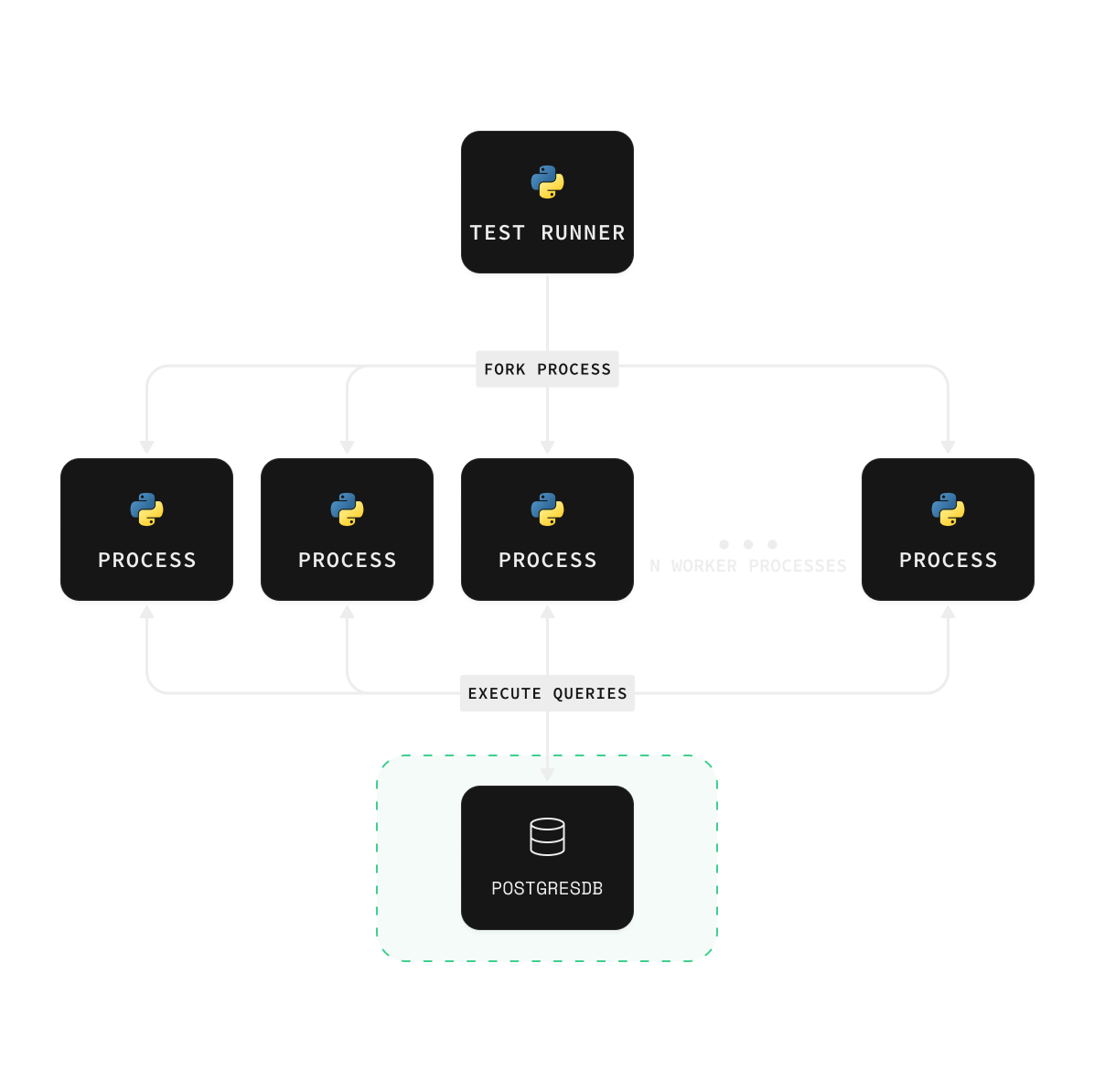

We follow techniques outlined in the ANN Benchmarks methodology. A Python test runner is responsible for uploading the data, creating the index, and running the queries. The pgvector engine is implemented using vecs, a Python client for pgvector.

Each test runs for at least 30 to 40 minutes and includes a series of experiments executed at different concurrency levels to measure the engine's performance under different load types. The results are then averaged.
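To illustrate what an experiment at a given concurrency level can look like, here is a minimal load-test sketch using only the Python standard library. It is not the test runner used for these benchmarks: `run_load_test` and `fake_query` are hypothetical names, and `fake_query` simply sleeps in place of a real similarity query. The reported metrics mirror the table columns (RPS, mean latency, p95 latency).

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_load_test(query_fn: Callable[[], None], concurrency: int, n_requests: int) -> dict:
    """Call query_fn n_requests times across `concurrency` threads and summarize."""

    def timed_call(_: int) -> float:
        start = time.perf_counter()
        query_fn()
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(n_requests)))
    wall_time = time.perf_counter() - wall_start

    # Simple rank-based approximation of the 95th percentile.
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {
        "concurrency": concurrency,
        "rps": n_requests / wall_time,
        "latency_mean": statistics.mean(latencies),
        "latency_p95": latencies[p95_index],
    }


def fake_query() -> None:
    # Hypothetical stand-in for a real query against the database; sleeps ~5 ms.
    time.sleep(0.005)


if __name__ == "__main__":
    for level in (1, 5, 30):
        print(run_load_test(fake_query, concurrency=level, n_requests=200))
```

In a real run, `fake_query` would be replaced by a function that issues one similarity query, and each level would run for much longer than a few hundred requests.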

As a general recommendation, we suggest using a concurrency level of 5 or more for most workloads and 30 or more for high-load workloads.
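One way to reason about concurrency levels is Little's law, which relates the average number of in-flight requests to throughput multiplied by mean latency. The sketch below applies it to the RPS and mean latency figures from the 1,000,000-vector table. Because the published figures are averages over several concurrency levels, the output is only a rough indication, and the function name is illustrative.

```python
def implied_concurrency(rps: float, mean_latency_s: float) -> float:
    """Little's law: average in-flight requests = throughput * mean latency."""
    return rps * mean_latency_s


if __name__ == "__main__":
    # (RPS, mean latency in seconds) from the 1,000,000-vector results table.
    results = {
        "XL": (110, 0.046),
        "2XL": (235, 0.083),
        "4XL": (420, 0.071),
        "8XL": (815, 0.072),
        "12XL": (1150, 0.052),
        "16XL": (1345, 0.072),
    }
    for plan, (rps, latency) in results.items():
        print(f"{plan}: ~{implied_concurrency(rps, latency):.1f} requests in flight")
```

By this estimate, XL corresponds to about 5 requests in flight and 16XL to about 97, which suggests that larger instances need correspondingly higher client concurrency to reach their measured throughput.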

Future benchmarks

We'll continue to add benchmarks on datasets with different vector dimensions, different numbers of lists in the index, and different numbers of probes. Stay tuned for more information about how these parameters affect the performance and precision of your queries.